Durable Entities Enable Shared State Across Orchestration Instances

I’ve written about code-based workflows using Durable Functions earlier. Durable Functions is an extension to Azure Functions that I’ve grown to appreciate more and more. In version 2.0 of Durable Functions, Microsoft introduced Durable Entities, which are built on a new type of function: entity functions.

This article describes a real-world use case for Durable Entities. In this use case, I’m sharing data between multiple orchestrations in Durable Functions.

What Problem Did I Solve With Durable Entities?

Every solution needs a problem. Otherwise it would not be a solution, would it? So what was the problem I solved with Durable Entities?

I’m currently working on a product that, among other things, automates Azure AD user processing. This processing includes steps that, when performed at scale, would be very laborious and error-prone if done manually. And changes that require reprocessing a large number of users can occur quite frequently.

So I created an orchestration function with Durable Functions that takes care of processing a single user. The orchestration only needs the ID of the user. It reads the rest of the information it needs from configuration and a few other data sources.

To learn more about how to leverage Dependency Injection in Azure Functions applications, see my earlier article. That article covers configuration, among other things.

The problem with that approach is that when an orchestration is triggered for a user, a previous orchestration instance for the same user may still be running. This is especially likely if the previous orchestration has encountered errors when calling child functions with retry policies, since the retries add to its running time. But sometimes the processing simply takes a while to complete, and a second instance is triggered for the same user before the previous one has completed. The two instances can then very easily conflict with each other.

Solution #1 – Rejected

To tackle this problem, I thought I’d use fixed instance IDs, like MyOrchestration-{UserId}, and terminate any running instance before starting a new one. The problem with this approach turned out to be the time it took to terminate an instance. When you call the TerminateAsync method, the instance is not terminated immediately; it is only queued for termination. In my local development environment, it often took several minutes for the instance to actually terminate. And you need to wait for the termination to complete before you start a new instance with the same instance ID. Otherwise, starting the new instance fails.
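To make the timing problem concrete, here is a small in-memory model of the rejected approach. It is written in TypeScript as a language-neutral sketch, not against the C# Durable Functions API: FakeOrchestrationClient, its methods, and the check-count "delay" are hypothetical stand-ins for starting, terminating and polling real instances. The model assumes only the two behaviors described above: termination is merely queued, and starting an instance ID that is still running fails.

```typescript
// Hypothetical in-memory model of the rejected approach. It is NOT the
// Durable Functions API; it only mimics the behavior described above:
// terminating an instance is merely queued, and starting an instance whose
// ID is still running fails.

type InstanceStatus = "Running" | "Terminated";

class FakeOrchestrationClient {
  private readonly statuses = new Map<string, InstanceStatus>();
  // Remaining status checks before a queued termination takes effect.
  private readonly pendingTerminations = new Map<string, number>();
  private readonly terminationDelay: number;

  constructor(terminationDelay: number) {
    this.terminationDelay = terminationDelay;
  }

  startNew(instanceId: string): void {
    if (this.statuses.get(instanceId) === "Running") {
      throw new Error(`Instance ${instanceId} is still running`);
    }
    this.statuses.set(instanceId, "Running");
  }

  // Queues the termination request; the instance keeps running for now.
  terminate(instanceId: string): void {
    if (this.statuses.get(instanceId) === "Running") {
      this.pendingTerminations.set(instanceId, this.terminationDelay);
    }
  }

  // Every status check lets one unit of "time" pass.
  getStatus(instanceId: string): InstanceStatus | undefined {
    const remaining = this.pendingTerminations.get(instanceId);
    if (remaining !== undefined) {
      if (remaining <= 0) {
        this.statuses.set(instanceId, "Terminated");
        this.pendingTerminations.delete(instanceId);
      } else {
        this.pendingTerminations.set(instanceId, remaining - 1);
      }
    }
    return this.statuses.get(instanceId);
  }
}

// Terminate any running instance for the user, wait until it is really gone,
// and only then start a new one. Returns the number of status checks needed.
function restartForUser(client: FakeOrchestrationClient, userId: string): number {
  const instanceId = `MyOrchestration-${userId}`;
  client.terminate(instanceId);
  let checks = 0;
  while (client.getStatus(instanceId) === "Running") {
    checks++; // a real caller would wait between checks, possibly for minutes
  }
  client.startNew(instanceId);
  return checks;
}
```

The polling loop is where the time goes: whoever calls restartForUser is blocked until the old instance is really gone.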

In my case, one of the changes that may trigger this orchestration is a call to a REST API. This is one of the areas where Durable Functions is very handy. When you receive an HTTP API call, you can just kick off a new orchestration instance and return the status code 202 (Accepted). That does not take long, and your API client stays happy.

But having the API client wait for a potential termination of a previous instance was going to be too much, I thought. Sure, I could have wrapped everything in another orchestration that would first have taken care of the potential termination and then started a new instance. However, that started to feel very kludgy.

This is why I eventually ended up rejecting this solution.

Solution #2 – Approved

I then started to lean towards using Durable Entities to keep track of which orchestration is the latest. For every user that will be processed, I create a Durable Entity that is keyed by the user. A Durable Entity is identified by two values: the entity name and the entity key.

So I created a Durable Entity called “UserProcessing” and used the user’s directory object ID as the key. In the orchestration function that takes care of the user processing, I simply create a reference to that entity instance. If the entity does not exist yet, it is created automatically the first time an operation is called on it. Then, I communicate with the entity using the methods defined on the entity class. In this case, the main responsibility of the entity is to keep track of which orchestration initialized it last. I then use this information to check whether the running orchestration is the one that initialized the entity.

This allows me to check whether another orchestration has been started for the same user after the currently running one. If so, I skip the processing in the current orchestration and rely on the more “fresh” orchestration to handle the processing for that user. The check itself happens later in the orchestration, where I use the entity again to see whether the running orchestration is still the current one.
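The core of the approved solution fits in a few lines. The TypeScript sketch below is a minimal in-memory model, not the Durable Functions API: UserProcessingState plays the role of the "UserProcessing" entity, getEntity looks entities up by name and key, and each orchestration records its own instance ID before later checking whether it is still the latest. All of these names are hypothetical.

```typescript
// Minimal in-memory model of the "latest orchestration wins" check.
// Hypothetical names; this is not the Durable Functions API.

// Entity state: plain data, so it is JSON serializable.
class UserProcessingState {
  lastOrchestrationId: string | null = null;

  // Called when an orchestration starts processing the user.
  initialize(orchestrationId: string): void {
    this.lastOrchestrationId = orchestrationId;
  }

  // Called later to check whether the caller is still the latest orchestration.
  isCurrent(orchestrationId: string): boolean {
    return this.lastOrchestrationId === orchestrationId;
  }
}

// Entities are identified by name and key; the map key combines both.
const entities = new Map<string, UserProcessingState>();

function getEntity(entityName: string, entityKey: string): UserProcessingState {
  const id = `${entityName}/${entityKey}`;
  let entity = entities.get(id);
  if (entity === undefined) {
    // Created implicitly on first use, as with Durable Entities.
    entity = new UserProcessingState();
    entities.set(id, entity);
  }
  return entity;
}

// Start of an orchestration for one user: register this instance as the latest.
// The returned function is the check the orchestration makes before processing.
function startOrchestration(instanceId: string, userId: string): () => boolean {
  const entity = getEntity("UserProcessing", userId);
  entity.initialize(instanceId);
  return () => entity.isCurrent(instanceId);
}

// Usage: a second orchestration for the same user supersedes the first one.
const first = startOrchestration("instance-1", "user-42");
const second = startOrchestration("instance-2", "user-42");
console.log(first(), second()); // false true
```

In the C# orchestration, the same two steps would be entity operations invoked with CallEntityAsync on an EntityId built from the entity name and the user’s object ID, passing context.InstanceId as the orchestration ID.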

Solution Benefits

Compared to the rejected solution above, this solution is much faster. Calling an operation on a Durable Entity from an orchestration is much like calling an activity function. And the Durable Functions runtime guarantees that operations on an entity instance are executed serially, one at a time. So there won’t be any conflicts due to simultaneous calls.
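The serialization guarantee is what removes the race between overlapping orchestrations. As a rough illustration, and not a description of how the Durable Functions runtime implements it, the TypeScript sketch below serializes operations per entity key with a promise chain. SerialEntityQueue, enqueue and slowIncrement are hypothetical names.

```typescript
// Hypothetical per-entity operation queue. Operations that target the same
// entity key run one at a time, in the order they were enqueued; different
// keys do not wait for each other. A sketch, not the runtime's implementation.
class SerialEntityQueue {
  private readonly tails = new Map<string, Promise<unknown>>();

  enqueue<T>(entityKey: string, operation: () => Promise<T>): Promise<T> {
    const previous = this.tails.get(entityKey) ?? Promise.resolve();
    // Start this operation only after the previous one for the key has settled.
    const result = previous.then(() => operation());
    // Store a tail that never rejects, so one failure does not block the queue.
    this.tails.set(entityKey, result.catch(() => undefined));
    return result;
  }
}

// A read-modify-write with a pause in the middle: two interleaved calls
// would both read the same value and one update would be lost.
async function slowIncrement(state: { count: number }): Promise<number> {
  const current = state.count;
  await Promise.resolve(); // yield, letting other work run in between
  state.count = current + 1;
  return state.count;
}

// Usage: three increments on the same entity key never interleave.
const queue = new SerialEntityQueue();
const counter = { count: 0 };
const increments = Promise.all([
  queue.enqueue("user-42", () => slowIncrement(counter)),
  queue.enqueue("user-42", () => slowIncrement(counter)),
  queue.enqueue("user-42", () => slowIncrement(counter)),
]);
```

Running two slowIncrement calls on the same counter without the queue ends with a count of 1 instead of 2, because both calls read 0 before either one writes.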

Of course, there are some downsides to this solution too. The first that comes to mind is that my orchestration function needs to use the entity to check whether it should keep on running. In my mind, that is a very small downside compared with the upsides, but it is still one to consider if you would like to go for a similar solution.

Source Code

To help you get started with a similar solution, I created a repository on GitHub that demonstrates how this works. You can find the source code here. The source code is well commented, so you should get the hang of it quickly. I’ve also kept it as simple as possible, with the “moving parts” kept to a minimum.

Please be sure to also check out this article I wrote earlier about writing applications with Azure Functions. Everything in it also applies to Durable Functions and Durable Entities.

How Do You Create and Use an Entity Function?

There are many ways to create Entity Functions. You can, for example, create them with JavaScript directly in the Azure Management Portal. But since I’m a C# kind of guy, I have gone with the class-based approach. Also remember that not all features are available to you if you create your Entity Functions with JavaScript.

So, to create an Entity Function, you need the following two main things.

- A function with an entity trigger

- A class representing the entity

I’ll describe these in more detail in the sections below.

The Entity Function

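The simplest form of an Entity Function is just like any other Durable Function, but with an entity trigger. The function then only needs to specify the class that represents the entity associated with the Entity Function. In C#, this is typically done by calling DispatchAsync<T>() on the entity context, which routes each incoming operation to the method with the same name on class T.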

Check out the Entity Function in my sample code.
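In C#, the body of a class-based entity function is typically a single call to DispatchAsync<T>() on the entity context. Conceptually, each dispatch loads the entity’s JSON state, runs the method whose name matches the operation, and saves the state back. The TypeScript sketch below models that cycle in memory; dispatch, stateStore and UserProcessingState are hypothetical, and the switch statement stands in for the reflection-based lookup the C# runtime performs.

```typescript
// Hypothetical model of class-based entity dispatch: load the JSON state,
// run the method named by the operation, save the state back. In C#,
// DispatchAsync<T>() does this work for you; this is only an illustration.

class UserProcessingState {
  lastOrchestrationId: string | null = null;

  initialize(orchestrationId: string): void {
    this.lastOrchestrationId = orchestrationId;
  }

  getLastOrchestrationId(): string | null {
    return this.lastOrchestrationId;
  }
}

type OperationName = "initialize" | "getLastOrchestrationId";

// Serialized state per entity key (a stand-in for durable storage).
const stateStore = new Map<string, string>();

function dispatch(entityKey: string, operation: OperationName, input?: string): unknown {
  // 1. Load: start from a fresh instance and copy in any saved state.
  const entity = new UserProcessingState();
  const saved = stateStore.get(entityKey);
  if (saved !== undefined) {
    Object.assign(entity, JSON.parse(saved));
  }

  // 2. Invoke the method that matches the operation name.
  let result: unknown = undefined;
  switch (operation) {
    case "initialize":
      if (input === undefined) throw new Error("initialize needs an orchestration ID");
      entity.initialize(input);
      break;
    case "getLastOrchestrationId":
      result = entity.getLastOrchestrationId();
      break;
  }

  // 3. Save: serialize the (possibly updated) state back to the store.
  stateStore.set(entityKey, JSON.stringify(entity));
  return result;
}

// Usage: state survives between operations because it round-trips through JSON.
dispatch("user-42", "initialize", "instance-1");
console.log(dispatch("user-42", "getLastOrchestrationId")); // instance-1
```

Because the state is rebuilt from JSON on every operation, anything that does not survive serialization is lost between operations, which is why the JSON requirements for entity classes matter.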

The Entity Class

The second part is the actual class that represents the entity. There are a few requirements that an entity class must meet.

- The class must have a public constructor. You can specify parameters on the constructor, but generally I would stick to a default (parameterless) constructor on an entity class.

- The class must be serializable to JSON. This means that any state you want to keep in an entity must be stored in a property or field that can be serialized to JSON with the Json.NET library. I would recommend using public read-write properties.

- Any operation (method) defined on the entity must have at most one argument and cannot specify generic type arguments. The method must not have any overloads. If an operation returns a value, it must be a Task or Task<T>, or it must be JSON serializable.

- Operation arguments and return values must also be JSON serializable, just like the entity class itself.

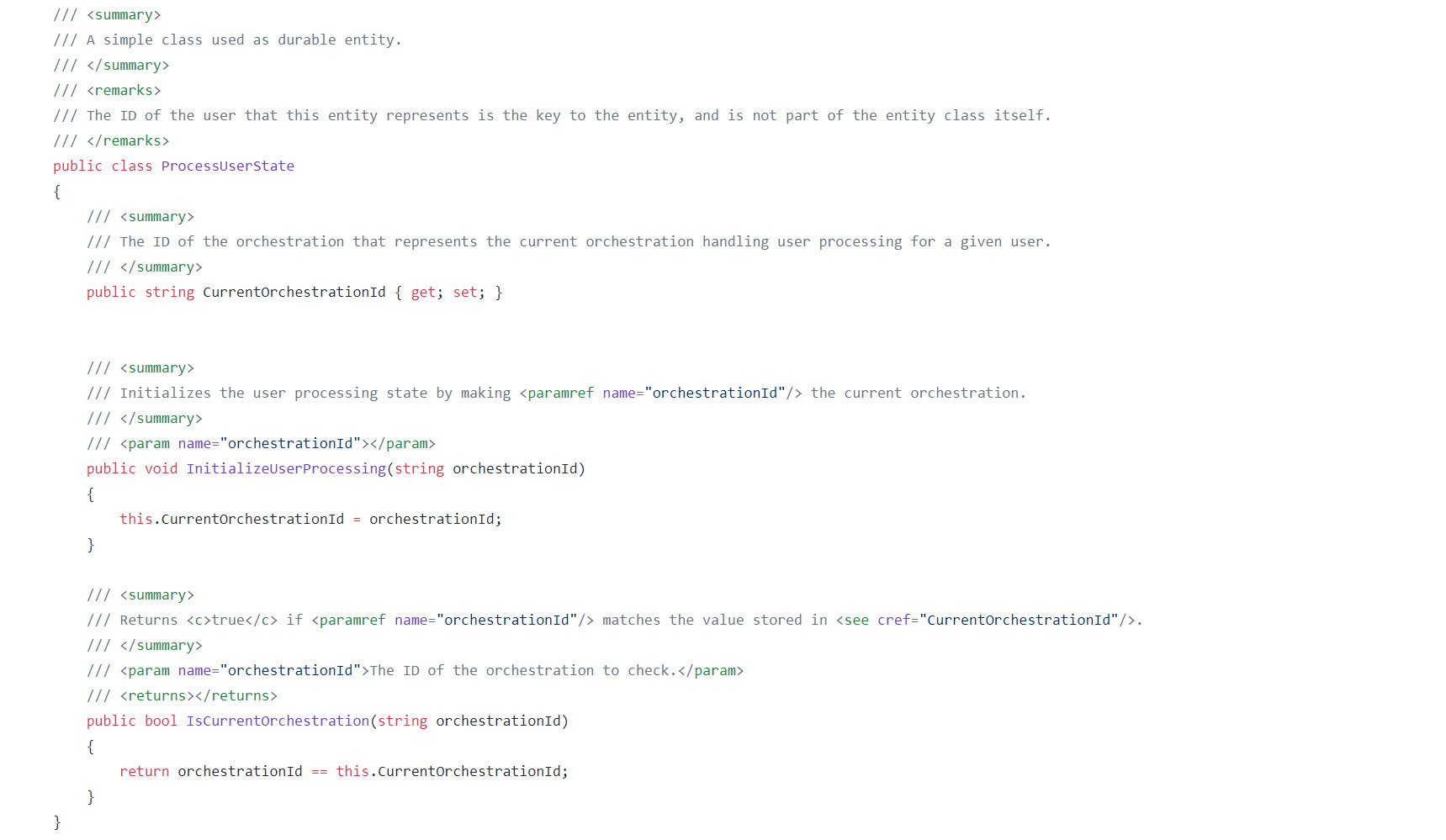

Have a look at the ProcessUserState class in my sample code.
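Breaking these requirements is easy to do by accident, for example by adding a field of a type that does not serialize cleanly. As a TypeScript analogy only, the sketch below checks an entity-like class for two of the rules: state that is plain JSON data, and operations with at most one parameter. JSON.stringify stands in for Json.NET and the rules differ in detail; isJsonValue, checkEntityClass, GoodState and BadState are hypothetical names.

```typescript
// Hypothetical checks mirroring the entity class requirements listed above.
// JSON.stringify stands in for Json.NET, so the rules only roughly match.

// True if a value survives a JSON round trip as the same plain data.
function isJsonValue(value: unknown): boolean {
  if (value === null || typeof value === "string" || typeof value === "boolean") {
    return true;
  }
  if (typeof value === "number") {
    return isFinite(value); // NaN and Infinity become null in JSON
  }
  if (Array.isArray(value)) {
    return value.every(isJsonValue);
  }
  if (typeof value === "object" && Object.getPrototypeOf(value) === Object.prototype) {
    const record = value as Record<string, unknown>;
    return Object.keys(record).every((key) => isJsonValue(record[key]));
  }
  return false; // undefined, functions, Map, Set, Date, class instances, ...
}

// Returns a list of problems; an empty list means the class looks fine.
function checkEntityClass(instance: object): string[] {
  const problems: string[] = [];
  const state = instance as Record<string, unknown>;
  for (const key of Object.keys(state)) {
    if (!isJsonValue(state[key])) {
      problems.push(`field '${key}' is not plain JSON data`);
    }
  }
  const proto: object = Object.getPrototypeOf(instance);
  for (const name of Object.getOwnPropertyNames(proto)) {
    const member: unknown = Object.getOwnPropertyDescriptor(proto, name)?.value;
    if (name !== "constructor" && typeof member === "function" && member.length > 1) {
      problems.push(`operation '${name}' takes more than one argument`);
    }
  }
  return problems;
}

// Usage: one class that follows the rules and one that breaks two of them.
class GoodState {
  lastOrchestrationId: string | null = null;
  initialize(orchestrationId: string): void {
    this.lastOrchestrationId = orchestrationId;
  }
}

class BadState {
  seen = new Map<string, string>(); // a Map serializes to {} and loses its data
  record(userId: string, when: Date): void { // two arguments
    this.seen.set(userId, when.toISOString());
  }
}

console.log(checkEntityClass(new GoodState())); // []
console.log(checkEntityClass(new BadState()).length); // 2
```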

Using a Durable Entity

So, how do you use a Durable Entity then? That’s pretty simple.

- Create a reference to the entity as an EntityId struct.

- Call an entity operation (method) using the CallEntityAsync or SignalEntity methods on the orchestration context.

When you use the CallEntityAsync method, your orchestration waits for the result of the entity operation. The SignalEntity method, on the other hand, is fire-and-forget: your orchestration continues without waiting for the entity operation to complete, and it does not receive any result from it.
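The difference between the two methods can be modeled in a few lines. In the TypeScript sketch below, EntityHost and its signal, call and processQueued methods are hypothetical stand-ins for SignalEntity, CallEntityAsync and the runtime’s background processing; the first-in, first-out processing order is an assumption of the model.

```typescript
// Hypothetical model contrasting "call" (wait for the result) with "signal"
// (fire-and-forget). Not the Durable Functions API. The model assumes the
// entity processes its messages in first-in, first-out order.

type EntityOperation =
  | { name: "initialize"; input: string }
  | { name: "getLastOrchestrationId" };

class EntityHost {
  private lastOrchestrationId: string | null = null;
  private readonly inbox: EntityOperation[] = [];
  readonly executed: string[] = []; // names of operations, in execution order

  // Signal: queue the operation and return at once; the caller gets no result.
  signal(operation: EntityOperation): void {
    this.inbox.push(operation);
  }

  // Call: run everything queued before it, then run this operation and hand
  // its result back to the waiting caller.
  call(operation: EntityOperation): string | null {
    this.processQueued();
    return this.execute(operation);
  }

  // Stand-in for the runtime working through queued messages in the background.
  processQueued(): void {
    let next = this.inbox.shift();
    while (next !== undefined) {
      this.execute(next);
      next = this.inbox.shift();
    }
  }

  private execute(operation: EntityOperation): string | null {
    this.executed.push(operation.name);
    if (operation.name === "initialize") {
      this.lastOrchestrationId = operation.input;
      return null;
    }
    return this.lastOrchestrationId;
  }
}

// Usage: the signal returns immediately; the later call sees its effect.
const host = new EntityHost();
host.signal({ name: "initialize", input: "instance-1" });
console.log(host.executed.length); // 0 (nothing has run yet)
console.log(host.call({ name: "getLastOrchestrationId" })); // instance-1
```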

Have a look at the sample code to see how to call an operation on an entity class. Note that I use nameof([class name].[method name]) to specify the name of the method representing the operation. This way I don’t need any “magic strings” in my code, and if I rename the method on the entity class, I get a compilation error instead of a silently broken call.
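TypeScript has no nameof operator, but the same goal, no magic strings and a compile-time error when a method is renamed, can be reached with a keyof-based type. In this sketch, OperationName, operationName and UserProcessingState are hypothetical names; the point is only that the compiler checks each operation name against the entity class’s methods.

```typescript
// Hypothetical TypeScript counterpart to C#'s nameof(Class.Method):
// operation names are checked against the entity class at compile time.

class UserProcessingState {
  lastOrchestrationId: string | null = null;

  initialize(orchestrationId: string): void {
    this.lastOrchestrationId = orchestrationId;
  }

  getLastOrchestrationId(): string | null {
    return this.lastOrchestrationId;
  }
}

// The names of T's methods only; data fields map to never and drop out.
type OperationName<T> = {
  [K in keyof T]: T[K] extends (...args: never[]) => unknown ? K : never;
}[keyof T] & string;

// Returns the name unchanged; its value is the compile-time check.
function operationName<T>(name: OperationName<T>): string {
  return name;
}

const initializeOp = operationName<UserProcessingState>("initialize");
console.log(initializeOp); // initialize

// Both of these would fail to compile:
// operationName<UserProcessingState>("initialise");          // typo
// operationName<UserProcessingState>("lastOrchestrationId"); // a field, not a method
```

Renaming initialize on the class turns every stale string literal into a compile error, which is the same safety net nameof gives in C#.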

Use Cases for Entity Functions

The use case I’ve described in this article is just one example that I think is quite common. However, there are several other use cases in which Durable Entities can bring a lot to the table.

Examples include caching data, accessing other resources, keeping state for various processes, and much more. When you evaluate whether Entity Functions are a good option for a particular use case, please consider the following constraints that apply to Durable Entities.

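- All calls to any operation on an entity instance are executed serially. This gives you reliability benefits, but it has performance implications: an entity instance processes only one operation at a time, so a heavily used entity can become a bottleneck.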

- Entity classes must be JSON serializable.

- Arguments and return values on entity operations must also be JSON serializable.

Summary

Durable Entities can be very helpful when building solutions that rely on Durable Functions. I described one such use case in this article. But I hope this article has also helped you realize the potential of Durable Entities along with the restrictions that apply to them.

2 Comments

Carlos · September 29, 2021 at 21:08

Great article! One question: what if I need to save large amounts of state? Would a durable entity be a good fit for that scenario?

Mika Berglund · September 30, 2021 at 07:50

I did not find any definite information about size limits for Durable Entities (or Durable Functions). However, Durable Functions (including Durable Entities) uses Azure Storage accounts for storage, so I assume you can’t store more data with Durable Entities than you can store in tables in a storage account. This is on a theoretical level, though.

However, since there is a lot of serialization and deserialization going on between every function call, I expect performance to become very poor long before you hit any storage limits. So what I typically do is store any potentially large data outside of the durable entity. The entity stores just pointers, like IDs, to the data associated with it, while the large data itself lives in another data store and is fetched only when it is needed.

Finally, I would probably do some testing first if I had concerns about hitting storage limits or similar. That applies to systems development in general, not just Durable Functions and Durable Entities.