Durable Functions Pitfalls in Azure Functions

Durable Functions is an extension to Azure Functions that allows you to write long-running workflows in code. I will not go into much detail on Durable Functions; instead, I will simply point you to this article, which gives you a detailed description. In this article, I will talk about the pitfalls that I’ve fallen into several times when working with Durable Functions. You can also read it as a set of best practices for avoiding these pitfalls. I hope that this article helps you along the way to perfecting your Durable Functions applications.

Brief Description of Durable Functions

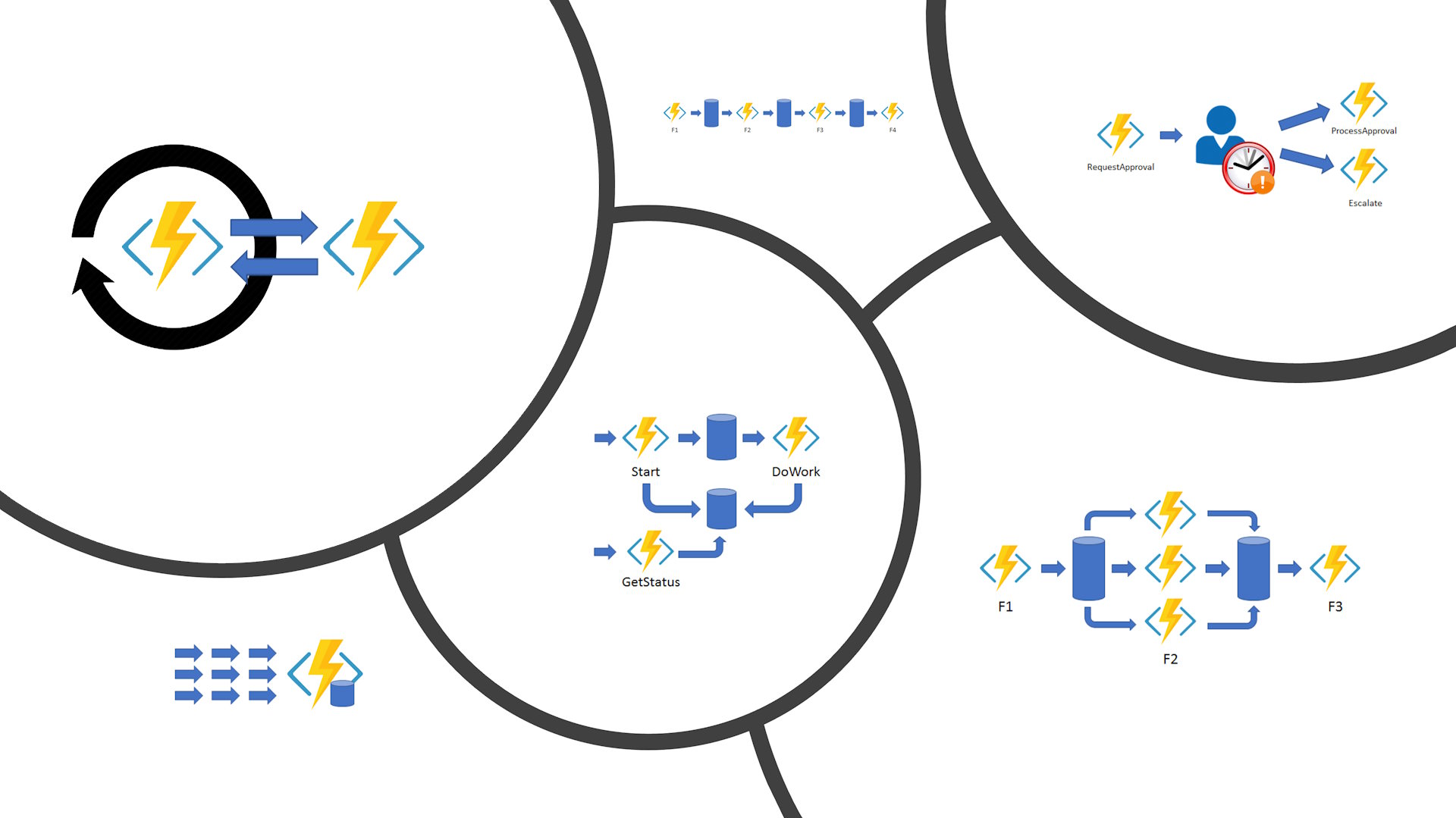

I will start with a brief explanation of what Durable Functions is, just to set the context for this article. There are three different types of functions, each with different features, responsibilities and constraints.

- Orchestration Functions – The backbone of your code based workflows where you define the logic

- Activity Functions – The workhorse of your workflows where you do all of your “heavy lifting”

- Entity Functions – Let you store objects with data that your orchestration functions can access

In this article I’ll focus on orchestration functions, since they are the type of Durable Functions with the most constraints and potential pitfalls. I will cover entity functions in a future article, or update this one.

Orchestration Functions Must Be Deterministic

Orchestration functions MUST be deterministic! This is probably among the first pitfalls you will fall into when working with Durable Functions, especially if you don’t understand the constraint properly. It means that when an orchestration function executes with the same input, it must always produce the exact same result. I can’t stress the importance of this enough! For me, this is by far the biggest reason for running into problems with Durable Functions. If you are lucky, you get a runtime exception when your function violates this constraint. However, in my experience, your function more often just behaves in a very weird way and you spend a lot of time trying to figure out what the problem is.

OK, so what does this mean in practice? It means that each and every line of code in your orchestration must produce exactly the same result with the same input. Even if the line of code runs multiple times. And that is exactly what happens in orchestration functions. The Durable Functions runtime potentially replays an orchestration function several times. That is the price we have to pay for durability.

Let’s Look at Some Code

Let’s take a closer look at how this happens in practice. Take the very simple sample code below. It is just some meaningless code to describe the problem that you may run into.

    [FunctionName(nameof(MyOrchestration))]
    public async Task MyOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        Guid id = Guid.NewGuid();
        string id1 = await context.CallActivityAsync<string>(nameof(MyActivity1), id);
        string id2 = await context.CallActivityAsync<string>(nameof(MyActivity2), id);

        bool result = id1 == id2;
    }

    [FunctionName(nameof(MyActivity1))]
    public async Task<string> MyActivity1([ActivityTrigger] Guid input)
    {
        return input.ToString();
    }

    [FunctionName(nameof(MyActivity2))]
    public async Task<string> MyActivity2([ActivityTrigger] Guid input)
    {
        return input.ToString();
    }

Now when the MyOrchestration function runs, it executes each line of code until it encounters the first activity function (or entity function) call on the orchestration context. At that point, the Durable Functions runtime pauses the orchestration and schedules the activity function with its input for execution. The runtime then executes these scheduled functions in a first-come-first-served manner. So it may take quite a while before your activity function executes, since other orchestration instances may have scheduled their own activity functions. That all depends on how much load your application is experiencing. But eventually it will execute. That’s the promise of Durable Functions.

When the activity function eventually executes and returns, the runtime will replay the orchestration function, and execute each line of code again. From the beginning! The orchestration function then executes until it encounters the next activity function, and does the same thing. This is called replaying.

What Happens During Replaying?

To give you a concrete understanding of what replaying means, we can have a look at the sample code above. I’ll list the lines in the order of execution in the list below (skipping the function signatures and other irrelevant lines).

1. Line #4 (`Guid id = Guid.NewGuid();`): The variable `id` is assigned a value.

2. Line #5 (the `MyActivity1` call): The orchestration encounters an uncalled activity function and schedules it for execution.

3. Line #14 (the body of `MyActivity1`): The activity function executes and returns a value (the value assigned to the `id` variable in step #1).

4. Line #4 (2nd run): The orchestration replays for the first time and assigns a value to the `id` variable. Note! This value is different from the value in step #1.

5. Line #5 (2nd run): The orchestration encounters an activity function that it called previously. The runtime returns the value from the execution history of the orchestration function without calling the activity function.

6. Line #6 (the `MyActivity2` call): The orchestration encounters another uncalled activity function, and schedules it for execution.

7. Line #20 (the body of `MyActivity2`): The second activity function executes and returns a value (the value assigned to the `id` variable in step #4).

8. Line #4 (3rd run): Again, the orchestration function starts from the beginning, and assigns yet another value to the `id` variable.

9. Line #5 (3rd run): The orchestration encounters an activity function that it previously executed, and the orchestration context returns the result of that function call from the execution history.

10. Line #6 (2nd run): Another line of code that the runtime replays and returns the value from the execution history.

11. Line #8 (`bool result = id1 == id2;`): The orchestration compares the results of both activity functions.

In short, the executed code lines are (replayed lines are bolded): 4, 5, 14, **4**, **5**, 6, 20, **4**, **5**, **6**, 8.

As you probably have guessed, the result variable is false, and not true, as you normally would expect. This is the result of replaying. It will come back and bite you, if you don’t keep this in mind when working with Durable Functions.
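
To make the replay mechanics tangible, here is a toy model in plain C#. It is only a sketch and not how the real runtime is implemented: the `ReplayDemo` class, its `history` list and the `PendingActivity` exception are all made up for illustration. The orchestrator body is restarted from the top every time an "activity" completes, and results of earlier calls are served from the recorded history.

```csharp
using System;
using System.Collections.Generic;

// Toy model of replay (NOT the real Durable Functions runtime): activity
// results are recorded in a history list and served from it on later runs.
public static class ReplayDemo
{
    // Thrown to "pause" the orchestrator when an activity has no recorded result yet.
    private sealed class PendingActivity : Exception { }

    public static bool Run()
    {
        var history = new List<string>(); // recorded activity outputs, in call order
        while (true)
        {
            int next = 0; // index of the next activity call within this run

            // Serves a recorded result, or "runs" the activity and pauses the orchestrator.
            string Call(Guid input)
            {
                if (next < history.Count) return history[next++];
                history.Add(input.ToString()); // the activity simply echoes its input
                throw new PendingActivity();
            }

            try
            {
                Guid id = Guid.NewGuid(); // re-evaluated on every replay
                string id1 = Call(id);
                string id2 = Call(id);
                return id1 == id2;
            }
            catch (PendingActivity)
            {
                // Start over from the top, as the runtime does after an activity completes.
            }
        }
    }
}
```

Calling `ReplayDemo.Run()` takes three passes through the body and returns `false`, because the two recorded results come from two different passes, each with its own fresh Guid.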

How to Make Your Orchestration Functions Deterministic

In my code example above I used a Guid to demonstrate how your function can go wrong without any runtime exceptions. The same goes for timestamps and random numbers too, just to mention a few. For instance, DateTime.UtcNow returns a different value each time you call it. The Random.Next method also returns a different value each time. It wouldn’t be random otherwise, now would it?

Luckily you can fix some of these quite easily with the help of the orchestration context. The IDurableOrchestrationContext.CurrentUtcDateTime property returns a value that is guaranteed to be deterministic. The same goes for the IDurableOrchestrationContext.NewGuid method.
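
As a minimal sketch of what that looks like, assuming the in-process C# model with the Microsoft.Azure.WebJobs.Extensions.DurableTask 2.x package, the orchestration below gets its Guid and timestamp from the context. The class and function names (`DeterministicSample`, `DeterministicOrchestration`, `EchoActivity`) are made up for this example.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public class DeterministicSample
{
    [FunctionName(nameof(DeterministicOrchestration))]
    public async Task<string> DeterministicOrchestration(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // Replay-safe: the context yields the same values on every replay.
        Guid id = context.NewGuid();
        DateTime startedAt = context.CurrentUtcDateTime;

        string id1 = await context.CallActivityAsync<string>(nameof(EchoActivity), id);
        string id2 = await context.CallActivityAsync<string>(nameof(EchoActivity), id);

        // Both activities received the same Guid, so the comparison is true.
        return $"{startedAt:O}: {id1 == id2}";
    }

    [FunctionName(nameof(EchoActivity))]
    public string EchoActivity([ActivityTrigger] Guid input) => input.ToString();
}
```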

However, the orchestration context does not support generating random numbers. That’s no problem though. You just need to wrap your random number generation in an activity function, and you are good to go.

    [FunctionName(nameof(MyOrchestration))]
    public async Task MyOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var rndInt = await context.CallActivityAsync<int>(nameof(GenerateRandomIntActivity), new Tuple<int, int>(1, 10));
        // rndInt is the same regardless of how many times the orchestration is replayed.
    }

    [FunctionName(nameof(GenerateRandomIntActivity))]
    public async Task<int> GenerateRandomIntActivity([ActivityTrigger] Tuple<int, int> input)
    {
        var rnd = new Random();
        // Random.Next(min, max) treats max as exclusive, so (1, 10) yields 1 through 9.
        return rnd.Next(input.Item1, input.Item2);
    }

I hope that I have managed to emphasize the importance of determinism well enough with these examples. As I wrote above, I’ve spent countless hours banging my head against anything hard around my desk, trying to figure out why my application was not working as I planned. All too many times I found out that the root cause of my problems was that one of my orchestration functions was not behaving in a deterministic fashion.

Async Function Calls in Orchestration Functions

Another one of the Durable Functions pitfalls that has caused me headaches all too many times is that you MUST NOT call async methods in orchestration functions. The only async methods that you are allowed to call are the ones that the orchestration context defines. These methods mainly allow you to work with orchestrations, sub-orchestrations, activities and entity functions.

If you need to call any other async methods like accessing a database, you need to wrap that logic into an activity function. You cannot call the async method directly from your orchestration function.

In earlier versions of Durable Functions, this used to be the case also for HTTP requests. Luckily there is now an async method on the orchestration context that helps with HTTP requests. The CallHttpAsync method allows you to do HTTP requests directly in your orchestration functions.

So, whenever you call an awaitable method in one of your orchestration functions, make sure that those calls are all going through the orchestration context.
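
The sketch below illustrates the rule, again assuming the in-process C# model with the DurableTask 2.x extension. The URL, the `AsyncCallsSample` class and the `LoadSettingActivity` function are hypothetical; the `Task.Delay` in the activity merely stands in for a real async database call.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public class AsyncCallsSample
{
    // Hypothetical endpoint, used only for illustration.
    private static readonly Uri StatusUri = new Uri("https://example.com/api/status");

    [FunctionName(nameof(AsyncCallsOrchestration))]
    public async Task<string> AsyncCallsOrchestration(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        // OK: the HTTP call goes through the orchestration context.
        DurableHttpResponse response = await context.CallHttpAsync(HttpMethod.Get, StatusUri);
        if (response.StatusCode != HttpStatusCode.OK)
        {
            return "unavailable";
        }

        // OK: any other async I/O is wrapped in an activity function.
        return await context.CallActivityAsync<string>(nameof(LoadSettingActivity), "greeting");
    }

    [FunctionName(nameof(LoadSettingActivity))]
    public async Task<string> LoadSettingActivity([ActivityTrigger] string key)
    {
        // Stand-in for a real async database call; activities may await anything.
        await Task.Delay(10);
        return $"value-for-{key}";
    }
}
```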

Looping in Orchestration Functions

Depending on how you structure your code, iterating over a collection of data in an orchestration function can follow either the function chaining pattern or the fan-out / fan-in pattern. If you call and await an activity function in each iteration, one by one, you may run into serious performance issues. This is particularly true when your activity function returns a large set of data. This is also one of the Durable Functions pitfalls that may require you to restructure your code quite a bit to avoid.

Let’s start with an example. Suppose you are writing an application that processes a collection of cities and stores the hourly weather forecasts in each city for the next day in your database. You use your own database to store the cities and an external weather service to get the forecasts from. The simplified sample code below shows the setup.

    [FunctionName(nameof(ProcessCityForecastsOrchestration))]
    public async Task ProcessCityForecastsOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var cities = await context.CallActivityAsync<IEnumerable<City>>(nameof(GetCitiesActivity), null);
        foreach (var city in cities)
        {
            var forecasts = await context.CallActivityAsync<IEnumerable<Forecast>>(nameof(GetCityForecastsForTomorrowActivity), city);
            // Here we would then store the forecasts, but we'll skip that for simplicity.
        }
    }

    [FunctionName(nameof(GetCitiesActivity))]
    public async Task<IEnumerable<City>> GetCitiesActivity([ActivityTrigger] IDurableActivityContext context)
    {
        // Read the cities from your database. The empty list is only a placeholder.
        IEnumerable<City> cities = new List<City>();
        return cities;
    }

    [FunctionName(nameof(GetCityForecastsForTomorrowActivity))]
    public async Task<IEnumerable<Forecast>> GetCityForecastsForTomorrowActivity([ActivityTrigger] City city)
    {
        // Use an external weather service to get the weather forecast for the given city.
        // The empty list is only a placeholder.
        IEnumerable<Forecast> forecasts = new List<Forecast>();
        return forecasts;
    }

This code works, but is far from optimal. As the number of cities gets bigger, there is more and more replaying taking place. Remember that for every activity that the orchestration encounters, the orchestration schedules the activity for execution. And when that activity completes, the runtime replays the orchestration from the start.

Replaying also involves deserializing data returned by previously executed activity functions. So, for every city in the iteration in the sample code above, there is one more activity function that already ran and returned weather forecasts. And each time, the runtime will deserialize the forecasts for the orchestration function to use.

Deserializing is generally pretty quick. But if the amount of data to deserialize grows with every iteration, and you do many iterations, it slows your application down more and more. And the slowdown can be very dramatic!

Looking at the Numbers

Imagine that you have 1000 cities in your database. For every city, you get 24 hourly forecasts for the next day. That is 24 000 weather forecasts each day. For the sake of simplicity, let’s also say that it takes 10 milliseconds to deserialize one hourly weather forecast.

Note! The call to the GetCitiesActivity activity function will also cause the list of cities to be deserialized for every replay, but that does not get slower for every iteration. The time is constant for every replay, which is why I excluded it from this numbers game to keep this sample as simple as possible.

So, for the forecasts returned for the first city, the deserialization will take 24 * 10 ms = 240 ms. That’s not bad. For the second city, the orchestration is replayed, and the foreach loop is played from the beginning. So to get the forecasts for the second city, we also have to deserialize the forecasts for the first city. Even though we already saved them during the first iteration. In other words, that deserialization is completely unnecessary.

Deriving from this, the time it takes to deserialize the forecasts for the second city is 24 * 10 ms + 24 * 10 ms = 480 ms. Still not that bad, but you start to get the picture, right?

Now fast forward to the 100th city. Before we can even request the forecasts for the 100th city, we have to deserialize the forecasts for 99 cities. With our sample values that sums up to 99 * 24 * 10 ms = 23 760 ms. That is over 23 seconds of deserializing, all of which is unnecessary.

Getting Really Ugly

OK, so what’s the situation at the 500th city? There you’d have to deserialize the forecasts of 499 cities, all in vain. And that would cost you 499 * 24 * 10 ms = 119 760 ms, which is almost 2 minutes.

Remember that for every iteration except for the first one, you are doing increasingly more and more unnecessary deserialization.

For the last iteration, the 1000th city, the unnecessary deserialization alone would take 999 * 24 * 10 ms = 239 760 ms, or about 4 minutes! And that’s just the last iteration!
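
To see how these per-iteration costs add up over the whole loop, the arithmetic can be sketched in a few lines of plain C#. The `ReplayCost` class and its parameter names are made up for this example, and the model is the same simplification as above: a fixed cost per forecast, with the cities list ignored.

```csharp
using System;

public static class ReplayCost
{
    // Milliseconds spent re-deserializing forecasts of earlier cities,
    // summed over all iterations of the loop (simplified model).
    public static long TotalWastedMs(int cities, int forecastsPerCity, int msPerForecast)
    {
        long total = 0;
        for (int city = 1; city <= cities; city++)
        {
            // Before city N is handled, the forecasts of the N - 1 earlier cities are read again.
            total += (long)(city - 1) * forecastsPerCity * msPerForecast;
        }
        return total;
    }
}
```

With the sample values, `ReplayCost.TotalWastedMs(1000, 24, 10)` comes to 119 880 000 ms, which is roughly 33 hours of unnecessary deserialization over the full run.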

Improving Performance

Fortunately there is quite a lot you can do to improve on the performance. The key is to avoid repeating unnecessary deserialization. Instead of having one main orchestration that would first get the forecasts with one activity function and then store them with another, you would make use of sub orchestrations and the fan-out/fan-in pattern. The sub orchestration would be responsible for getting and storing the forecasts for one single city. The code below shows how you would structure this.

    [FunctionName(nameof(ProcessCityForecastsOrchestration))]
    public async Task ProcessCityForecastsOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var cities = await context.CallActivityAsync<IEnumerable<City>>(nameof(GetCitiesActivity), null);
        var taskList = new List<Task>();

        foreach (var city in cities)
        {
            taskList.Add(context.CallSubOrchestratorAsync(nameof(StoreSingleCityForecastsOrchestration), city));
        }
        await Task.WhenAll(taskList);
    }

    [FunctionName(nameof(StoreSingleCityForecastsOrchestration))]
    public async Task StoreSingleCityForecastsOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var city = context.GetInput<City>();
        var forecasts = await context.CallActivityAsync<IEnumerable<Forecast>>(nameof(GetCityForecastsForTomorrowActivity), city);
        // Here we would then store the forecasts for the given city.
    }

    [FunctionName(nameof(GetCitiesActivity))]
    public async Task<IEnumerable<City>> GetCitiesActivity([ActivityTrigger] IDurableActivityContext context)
    {
        // Read the cities from your database. The empty list is only a placeholder.
        IEnumerable<City> cities = new List<City>();
        return cities;
    }

    [FunctionName(nameof(GetCityForecastsForTomorrowActivity))]
    public async Task<IEnumerable<Forecast>> GetCityForecastsForTomorrowActivity([ActivityTrigger] City city)
    {
        // Use an external weather service to get the weather forecast for the given city.
        // The empty list is only a placeholder.
        IEnumerable<Forecast> forecasts = new List<Forecast>();
        return forecasts;
    }

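The performance improvements in this example come from two separate areas. First of all, the cities collection on line #4 (the GetCitiesActivity call) is deserialized far fewer times. In the ideal case that is only two times: once when the GetCitiesActivity function is called for the first time, and once more when the orchestration is replayed after returning from line #11 (the Task.WhenAll call). So 2 times instead of 1000 times. In practice the runtime may replay the main orchestration additional times while the sub orchestrations complete, since it processes their completions in batches.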

The second performance improvement, the most significant, comes from the fact that each weather forecast is deserialized only inside its own sub orchestration, and not again for every city that follows. Fanning out like this dramatically decreases the number of replays, thus cutting down the time spent on meaningless deserialization. What this means in practice is that the iteration on lines 7-10 (the foreach loop) runs in one go, since we don’t call any sub orchestration function with the await keyword. Instead, we create a collection of tasks representing the sub orchestration calls, and then await them all together on line #11. At that point, the main orchestration pauses and waits for all of the sub orchestration functions to run and return before continuing.

You don’t have to use sub orchestrations to fan out. You can do that with activity functions as well. In this example I used sub orchestrations because each sub orchestration calls two separate activity functions: one to get the forecasts and one to store them.
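
A fan-out over activity functions could look roughly like the sketch below. It assumes the in-process C# model with the DurableTask 2.x extension and folds fetching and storing into a single hypothetical activity, `FetchAndStoreForecastsActivity`, which returns only a count. The `City` shape, the placeholder data and all names in `ActivityFanOutSample` are assumptions made for this example.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

// Hypothetical shape of a city; the article does not define the City type.
public class City
{
    public string Name { get; set; }
}

public class ActivityFanOutSample
{
    [FunctionName(nameof(FanOutWithActivitiesOrchestration))]
    public async Task<int> FanOutWithActivitiesOrchestration(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var cities = await context.CallActivityAsync<List<City>>(nameof(GetCitiesActivity), null);

        // Schedule one activity per city without awaiting inside the loop.
        List<Task<int>> tasks = cities
            .Select(city => context.CallActivityAsync<int>(nameof(FetchAndStoreForecastsActivity), city))
            .ToList();

        // Only small integers come back, so replays have little to deserialize.
        int[] storedCounts = await Task.WhenAll(tasks);
        return storedCounts.Sum();
    }

    [FunctionName(nameof(GetCitiesActivity))]
    public List<City> GetCitiesActivity([ActivityTrigger] IDurableActivityContext context)
    {
        // Placeholder data instead of a database query.
        return new List<City> { new City { Name = "Helsinki" }, new City { Name = "Turku" } };
    }

    [FunctionName(nameof(FetchAndStoreForecastsActivity))]
    public int FetchAndStoreForecastsActivity([ActivityTrigger] City city)
    {
        // A real implementation would call the weather service and write to the database here.
        return 24; // number of hourly forecasts stored for this city
    }
}
```

Keeping the large forecast payload inside the activity means it never enters the orchestration history, which is the point of the restructuring.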

Cleaning up Function History

Durable Functions provides a high level of resilience towards unexpected incidents. These can be network problems, application crashes or something similar. This is achieved using persistent storage to store all orchestration, activity and entity function calls before actually performing the actions. This allows Durable Functions to recover from virtually any incident, except for deleting the storage. Even if you delete the application and recreate it and connect it to the same storage, your functions will continue running.

Over time, this storage fills up, because Durable Functions does not clean it up. This really hit me this summer when we started experiencing a lot of errors in a somewhat busy Durable Functions application that we are building. These errors were then multiplied because we used a retry policy on most of our activity calls that retried the call several times. So the work items in the history table really started piling up. At some point the storage account was the resource that incurred most of the costs in the resource group hosting our application. This was really a new situation for me, since typically storage accounts in Azure cost peanuts. At some point I think our history table was using over 100 GB!

Add Your Own Cleaning Logic

Fortunately there is quite an easy way to take care of this. The Durable Functions client defines a method, PurgeInstanceHistoryAsync, that you can call regularly to clean up the history table. The simplest way to call this is to use a timer trigger, as shown in the code below.

    [FunctionName(nameof(CleanOrchestrationHistoryTimer))]
    public async Task CleanOrchestrationHistoryTimer([TimerTrigger("0 0 20 * * *")] TimerInfo timer, [DurableClient] IDurableOrchestrationClient client)
    {
        var statusList = new List<OrchestrationStatus> { OrchestrationStatus.Terminated, OrchestrationStatus.Completed, OrchestrationStatus.Canceled };
        await client.PurgeInstanceHistoryAsync(DateTime.UtcNow.AddYears(-2), DateTime.UtcNow.AddDays(-14), statusList);
    }

This would run your cleanup routine every day at 20:00 UTC. It would purge the history of all instances that were created between 2 years and 14 days ago and that are marked as completed, terminated or canceled. If you would like to clean out other statuses as well, just add those statuses to the statusList collection.

To make this more robust, you could of course wrap your cleaning in an activity function that you call from an orchestration function. Then use the timer trigger to start a new orchestration instance. The code below shows you how to do that.

    [FunctionName(nameof(CleanOrchestrationHistoryTimer))]
    public async Task CleanOrchestrationHistoryTimer([TimerTrigger("0 0 20 * * *")] TimerInfo timer, [DurableClient] IDurableOrchestrationClient client)
    {
        await client.StartNewAsync(nameof(CleanOrchestrationHistoryOrchestration));
    }

    [FunctionName(nameof(CleanOrchestrationHistoryOrchestration))]
    public async Task CleanOrchestrationHistoryOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        await context.CallActivityAsync(nameof(CleanOrchestrationHistoryActivity), null);
    }

    [FunctionName(nameof(CleanOrchestrationHistoryActivity))]
    public async Task CleanOrchestrationHistoryActivity([ActivityTrigger] IDurableActivityContext context, [DurableClient] IDurableOrchestrationClient client)
    {
        var statusList = new List<OrchestrationStatus> { OrchestrationStatus.Terminated, OrchestrationStatus.Completed, OrchestrationStatus.Canceled };
        await client.PurgeInstanceHistoryAsync(DateTime.UtcNow.AddYears(-2), DateTime.UtcNow.AddDays(-14), statusList);
    }

Renaming Functions

Sometimes you need to refactor your code, and restructure it. This can sometimes also include renaming methods. Under normal conditions, renaming a method is not that big of a deal. But with Durable Functions renaming functions may cause you trouble. Perhaps not one of the deepest Durable Functions pitfalls, but at least it is something you need to keep in mind.

As implied earlier, all function calls in Durable Functions are logged and stored in a history table. Each row in this table contains the name of the function, its input and output, and some other things.

When your orchestration function runs, it schedules all sub orchestrations and activities to be run. If you stop your application before they had the chance to run, then they will run after you restart your application.

Now if you stopped your application in order to update it with a refactored version where you have changed the name of a function that was scheduled for execution, there is no way that the Durable Functions runtime would know what your function is called in the updated version. This would lead to a runtime exception, and the function that the previous version scheduled will never run.

Considerations for Renaming Functions

There is not very much you can do about this other than not to rename your functions. That’s not a good solution though. I have not had that much of a problem with this, but what I typically do is that I leave the old function as is, and write a new one with the improved functionality, and give it a new name. I typically also mark the old function as obsolete just so that I have the help of the compiler to find that function at a later time. Then update the application and let all instances pointing to the old function run out. You can then delete the old function in a future update after you’ve made sure you don’t have any instances of the old function running.
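
A minimal sketch of that approach, assuming the in-process C# model with the DurableTask 2.x extension. The function names `SendMailActivity` (old) and `SendWelcomeEmailActivity` (new) are hypothetical, and the bodies are left empty on purpose.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public class RenameSample
{
    // Old function: left in place so that instances already scheduled under this name can still run.
    [Obsolete("Use SendWelcomeEmailActivity. Remove once no running instance refers to this name.")]
    [FunctionName(nameof(SendMailActivity))]
    public Task SendMailActivity([ActivityTrigger] string email)
    {
        // The original implementation stays untouched (omitted in this sketch).
        return Task.CompletedTask;
    }

    // New function with the new name; updated orchestration code calls only this one.
    [FunctionName(nameof(SendWelcomeEmailActivity))]
    public Task SendWelcomeEmailActivity([ActivityTrigger] string email)
    {
        // The improved implementation goes here (omitted in this sketch).
        return Task.CompletedTask;
    }
}
```

Any code that still references the old method then shows up as a compiler warning, which makes it easier to find when the time comes to delete it.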

Be aware, though, that orchestrations can last for a very long time. As I’ve understood it, there is no limit to how long an orchestration can last. For instance, if you wait for an external event in your orchestration, there is no upper time limit for how long the orchestration can wait for the event. You can also use durable timers to create a delay in your orchestration. Durable Functions implemented in .NET support arbitrarily long timers.
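
To illustrate how an instance can stay alive for weeks, here is a sketch of an orchestration that waits for an external event with a durable timer as the deadline. It assumes the in-process C# model with the DurableTask 2.x extension; the event name "Approval", the 30-day deadline and the `LongRunningSample` class are made up for this example.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public class LongRunningSample
{
    [FunctionName(nameof(ApprovalOrchestration))]
    public async Task<string> ApprovalOrchestration(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        using (var cts = new CancellationTokenSource())
        {
            // The deadline is based on the replay-safe clock, never on DateTime.UtcNow.
            DateTime deadline = context.CurrentUtcDateTime.AddDays(30);
            Task timeout = context.CreateTimer(deadline, cts.Token);

            // "Approval" is a hypothetical event name raised by some client.
            Task<bool> approval = context.WaitForExternalEvent<bool>("Approval");

            Task winner = await Task.WhenAny(approval, timeout);
            if (winner == approval)
            {
                cts.Cancel(); // cancel the pending timer so the instance can complete
                return approval.Result ? "approved" : "rejected";
            }

            return "timed out";
        }
    }
}
```

An instance like this can reference function names for up to 30 days after it started, so an old function may need to stay deployed at least that long.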

Monitoring Durable Functions

So how would you know what functions are running? Luckily there is an extension to Visual Studio Code called Durable Functions Monitor. With this extension you can easily connect to the task hub of your Durable Functions application and find the orchestrations you want by filtering and sorting the list of orchestrations.

With the extension you can then work with each orchestration instance and get a better view of what it has done and what it is still going to do. You can also perform actions on the orchestration with this tool such as suspend and resume, terminate and purge, just to mention a few. There’s a lot more you can do with this extension, so be sure to install it. Personally, I wouldn’t do any Durable Functions development without this tool anymore.

Examples From My Personal Experience

Now that you’ve come this far with this article, I think you deserve a reward. This time, the reward comes in the form of me describing perhaps my most embarrassing examples of falling into the pitfalls that I’ve described in this article. Have fun reading 😉

Creating New User Accounts

This is perhaps the problem that I spent the most time on, trying to figure out why the heck my app was not working. It was many years ago when I was working on an Extranet solution for residents. We had a feature where residents could sign up using their Finnish bank IDs. In order not to have residents use bank IDs every time they logged in, we created a user account with their e-mail and a generated password, which we then sent to the given e-mail. The residents then used their e-mail and the generated password for subsequent logins. Of course, they had to change the password on first login.

Already back then I was a fan of Durable Functions. And I still am. So I decided to implement the user account creation feature using Durable Functions. I created an orchestration function that called one activity function to create the user account with a random password. Then call another activity to send that e-mail address and password in an e-mail to the resident. The code below is a much simplified version of that logic.

Sample Code

    [FunctionName(nameof(CreateAndSendUserAccountOrchestration))]
    public async Task CreateAndSendUserAccountOrchestration([OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var email = context.GetInput<string>(); // Assume the e-mail address is sent as input to the orchestration.
        var pwd = this.GenerateRandomPassword();
        var accountInfo = new Tuple<string, string>(email, pwd);
        await context.CallActivityAsync(nameof(CreateUserAccountActivity), accountInfo);
        await context.CallActivityAsync(nameof(SendUserAccountActivity), accountInfo);
    }

    [FunctionName(nameof(CreateUserAccountActivity))]
    public async Task CreateUserAccountActivity([ActivityTrigger] Tuple<string, string> input)
    {
        // Here we would use the username and password sent in the input to connect to our user registry
        // and create a new user account.
    }

    [FunctionName(nameof(SendUserAccountActivity))]
    public async Task SendUserAccountActivity([ActivityTrigger] Tuple<string, string> input)
    {
        // Here we would take the username and password from the input, add that information to an e-mail template
        // and send it to the given e-mail address.
    }

    private string GenerateRandomPassword()
    {
        // Use System.Random or some other mechanism to generate a random password and return it.
        // Guid.NewGuid() is only a stand-in here to keep the sample compilable.
        string pwd = Guid.NewGuid().ToString("N");
        return pwd;
    }

Very innocent looking code, don’t you think? But since you’ve now read this article, you probably immediately spot the problem. Well, I did not! I got the user account created, and the e-mail was sent properly. But when I tried to log in, I got a login failed error. Every time! Incorrect username and/or password!

A Very Easy Solution

I don’t remember how many times I deleted the generated user account and retried the whole thing. The same result every time. I could not understand what was going wrong. It was really a mystery. I even used break points in the activity functions to step through them. Yes, my code was in fact creating a user account, and yes, my code actually sent the e-mail. There was only one orchestration instance running at a time. I made sure of that to ensure that there was no mixing of data between orchestration instances.

It wasn’t until I started looking closer at the inputs to the activity functions that I pretty quickly realized what the problem was. My code generated the password twice! One password that I used to create the user account with. And another password that I sent out in the e-mail. Afterwards, I’ve been trying to figure out how on earth did I not notice that earlier. Can’t say for sure, but the only thing I can think of is that the call to the GenerateRandomPassword() method looks so innocent, since it is not an async function. Well, we all know better now, don’t we?

So, I simply wrapped the password generation logic in an activity function. The problem was solved with just a few additional lines of code. I am still beating myself up over this, wondering how I could have been so blind. Enough time has now elapsed that I can at least share it as a funny lesson.
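
The fix could look roughly like the sketch below, assuming the in-process C# model with the DurableTask 2.x extension. The `GeneratePasswordActivity` function, the password length and the alphabet are assumptions made for this example, not the original implementation, and the two existing activities are reduced to empty stubs.

```csharp
using System;
using System.Security.Cryptography;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public class UserAccountSample
{
    [FunctionName(nameof(CreateAndSendUserAccountOrchestration))]
    public async Task CreateAndSendUserAccountOrchestration(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        var email = context.GetInput<string>();

        // The password is generated once; replays read it back from the history.
        var pwd = await context.CallActivityAsync<string>(nameof(GeneratePasswordActivity), 16);
        var accountInfo = new Tuple<string, string>(email, pwd);

        await context.CallActivityAsync(nameof(CreateUserAccountActivity), accountInfo);
        await context.CallActivityAsync(nameof(SendUserAccountActivity), accountInfo);
    }

    [FunctionName(nameof(GeneratePasswordActivity))]
    public string GeneratePasswordActivity([ActivityTrigger] int length)
    {
        const string alphabet = "ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz23456789";
        var chars = new char[length];
        for (int i = 0; i < length; i++)
        {
            // RandomNumberGenerator.GetInt32 requires .NET Core 3.0 or later.
            chars[i] = alphabet[RandomNumberGenerator.GetInt32(alphabet.Length)];
        }
        return new string(chars);
    }

    [FunctionName(nameof(CreateUserAccountActivity))]
    public Task CreateUserAccountActivity([ActivityTrigger] Tuple<string, string> input)
        => Task.CompletedTask; // creating the account is omitted

    [FunctionName(nameof(SendUserAccountActivity))]
    public Task SendUserAccountActivity([ActivityTrigger] Tuple<string, string> input)
        => Task.CompletedTask; // sending the e-mail is omitted
}
```

Because the password now comes out of an activity call, both later activities receive the same value no matter how many times the orchestration is replayed.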

Further Reading

Here is a list of links that I think provide you with additional information when working with Durable Functions. Hopefully you have found this article useful.
